When we watch a performer play an instrument, the information usually flows like this: the performer (duly trained on the instrument) performs an action (for example, pressing a string between two frets of an electric guitar and making it vibrate with a pick), and the instrument transmits this action by means of its pickups, which generate a voltage proportional to the oscillation of the string. A cable then carries this signal to the amplifier, which “processes” the input by increasing its amplitude and driving the loudspeakers connected to it, thereby making the voltage audible.

In the same way, in many interactive performances that take place in real time (such as Emovere), one or several performers reproduce a similar flow of information, where the electric guitar is replaced by one or several sensors and the amplifier is replaced by one or several computers and a sound system that can range from an audio interface with small loudspeakers to a multichannel system for a whole room.

What these two examples have in common is that each has an input, a processing stage and an output. From now on we will refer to systems of this kind as IPO systems (input, processing and output).

In the case of Emovere, the system’s input consists of 16 sensors that measure physiological parameters (heartbeat and muscle tension in different parts of the body) of 4 performers. Since each sensor operates at 250 Hz, the system receives 4,000 data values per second, which must be pre-processed in order to extract the relevant information and remove the noise inherent to the circuits involved. Once pre-processed, the data must be routed to the appropriate processing units within the interaction platform, which was designed specifically for the piece but could just as well be used in other performances that follow the IPO model.
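
The figure of 4,000 values per second follows directly from the number of sensors and their sampling rate. The following is a minimal Python sketch of that arithmetic together with a very simple smoothing step. The function names and the moving-average filter are illustrative assumptions; the text does not say which filter was actually used in Emovere.

```python
from typing import List


def values_per_second(num_sensors: int, sample_rate_hz: int) -> int:
    """Total number of sensor readings arriving each second."""
    return num_sensors * sample_rate_hz


def moving_average(samples: List[float], window: int) -> List[float]:
    """Smooth a signal by averaging each sample with its predecessors.

    Hypothetical stand-in for the noise-reduction step of the
    pre-processing stage.
    """
    smoothed = []
    for i in range(len(samples)):
        start = max(0, i - window + 1)
        chunk = samples[start:i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


print(values_per_second(16, 250))  # 16 sensors at 250 Hz -> 4000
```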

Interaction Platform

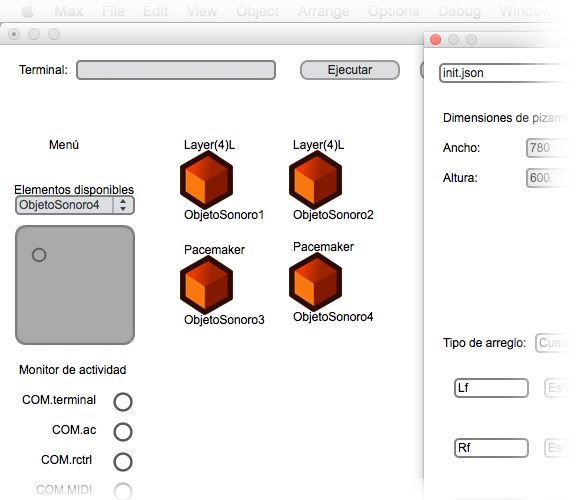

In turn, the interaction platform itself can be considered an IPO system: it receives the pre-processed, clean data (input), processes it through multiple patches written in Max/MSP, the environment in which the platform is programmed (processing), and generates one or several outputs that produce sound and sometimes also data (output). These patches are the system’s processing units and from now on will be called Sound Objects (SOs).

Besides being an IPO system, the interaction platform provides the functions needed to manage the different SOs: creating an SO, deleting an SO, and saving the current system configuration so that it can be recalled later without repeating the whole setup procedure step by step every time it is used. The audio outputs can also be managed through the platform, and output configurations can be saved, ranging from the classic stereophonic configuration to configurations with more speakers, such as quadraphonic and surround systems.
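
The platform’s double role, as an IPO system and as a manager of SOs, can be sketched in a few lines of Python. This is only a conceptual model under our own assumptions: the class and method names are hypothetical, and in the real platform each SO is a Max/MSP patch that produces audio rather than a Python function.

```python
from typing import Callable, Dict, List

# A Sound Object is modelled here as a function from input data to output
# values. This is an illustrative simplification.
SoundObject = Callable[[List[float]], List[float]]


class InteractionPlatform:
    """Hypothetical model of the platform's IPO role and SO management."""

    def __init__(self) -> None:
        self.sound_objects: Dict[str, SoundObject] = {}

    def create_so(self, name: str, so: SoundObject) -> None:
        """Register a new SO under a name."""
        self.sound_objects[name] = so

    def remove_so(self, name: str) -> None:
        """Delete an SO; unknown names are ignored."""
        self.sound_objects.pop(name, None)

    def process(self, data: List[float]) -> Dict[str, List[float]]:
        """Input -> processing by every SO -> one output per SO."""
        return {name: so(data) for name, so in self.sound_objects.items()}


platform = InteractionPlatform()
platform.create_so("gain", lambda data: [0.5 * x for x in data])
print(platform.process([1.0, 2.0]))  # {'gain': [0.5, 1.0]}
```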

The audio mix of the SOs can be managed in real time using a MIDI controller, and the controller’s CC (Control Change) assignments can also be saved.

The Sound Object (SO)

As noted above, the Sound Object (SO) is the processing unit existing within the interaction platform. Each Sound Object is composed of multiple algorithms developed in Max/MSP, which are designed to process the input data and produce sound through one or multiple output channels and, in some cases, also produce data.

Each Sound Object is independent of the interaction platform, except for a minimum number of parameters that the platform needs to read in order to manage the set of SOs adequately. This independence provides a significant degree of freedom when programming the SOs: the desired processing of the input data can be developed without being bound by rules or limitations other than the processing power of the computer that hosts the interaction platform.

For Emovere, several SOs were developed and grouped in different Interaction Modes, which are focused on different parts of the piece and types of sensors. Some examples are explained below:

– In the overture, the performers record texts in front of a microphone, and these texts are subsequently played back in a granulated manner (out of order and in fragments that are generally much shorter than the total length of the recording). The parameters that control how the playback of the file is disordered are mediated by the muscle tension of the dancers’ limbs.

– In the act that comes after the overture, there is a part dedicated to the emotion of anger. In this section, the performers trigger previously recorded and edited sounds that portray this emotion. These sounds are played back only in response to abrupt movements, and their spatial distribution within the room is mediated by the same movements.

– In the third and last act of the piece, there are various sound banks that are played back polyphonically, and the playback frequency is always a multiple of the heart rate of one or more performers. To reinforce the sound being heard, there are also visual resources mediated by the same type of data. Together, both resources amount to an amplification of the dancers’ heartbeats, but with the artistic mediation inherent to Emovere’s proposal.
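
Two of these interaction modes lend themselves to a short illustration. The Python sketch below is a simplification under our own assumptions, not the Max/MSP code used in the piece: `granulate` only reorders short fragments of a recording according to a `disorder` value between 0 and 1 (standing in for muscle tension), and `trigger_interval_seconds` assumes that the “multiple of the heart rate” refers to how often a sound is triggered. All names are hypothetical.

```python
import random
from typing import List


def granulate(samples: List[float], grain_size: int, disorder: float,
              rng: random.Random) -> List[float]:
    """Cut a recording into grains and swap some of them at random.

    disorder = 0.0 leaves the recording untouched; higher values swap
    more pairs of grains.
    """
    if grain_size <= 0:
        raise ValueError("grain_size must be positive")
    grains = [samples[i:i + grain_size]
              for i in range(0, len(samples), grain_size)]
    order = list(range(len(grains)))
    swaps = int(max(0.0, min(1.0, disorder)) * len(grains))
    for _ in range(swaps):
        a = rng.randrange(len(grains))
        b = rng.randrange(len(grains))
        order[a], order[b] = order[b], order[a]
    return [sample for index in order for sample in grains[index]]


def trigger_interval_seconds(heart_rate_bpm: float, multiple: int) -> float:
    """Seconds between triggers at `multiple` times the heart rate."""
    if heart_rate_bpm <= 0 or multiple <= 0:
        raise ValueError("heart rate and multiple must be positive")
    return 60.0 / (heart_rate_bpm * multiple)


recording = [float(n) for n in range(8)]
print(granulate(recording, 2, 0.0, random.Random(0)))  # order unchanged
print(trigger_interval_seconds(60, 2))  # 0.5
```

Passing a seeded `random.Random` keeps the reordering repeatable, which is convenient for rehearsals and tests.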

Managing the Resources of the Computer

As the number of inputs, processing units and outputs of the system increases, obtaining a good sound result and managing the mix throughout the entire performance becomes increasingly difficult. For Emovere, a total of 58 audio channels and 37 SOs were used during the almost 60 minutes of the piece. In order to support all the processing involved, certain precautions must be taken, which are explained below:

1. CPU Management

Although 58 channels and 37 SOs were used during the piece, not all of them were processing information throughout the 60 minutes of Emovere. Given the amount of data processed per unit of time, creating the objects right before using them was not appropriate, because the functions involved in creating an object would interrupt the generation of the 4 output audio channels used in most of the presentations. Four channels mean generating 176,400 audio samples each second (88,200 if the configuration is stereophonic instead of quadraphonic), which corresponds to a sampling rate of 44,100 Hz per channel. If audio generation is interrupted even for a small fraction of time, the audience will hear a dropout in the audio.
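
The sample counts above can be checked with a few lines of Python. The sampling rate of 44,100 Hz is not stated explicitly in the text; it is inferred here from the figures given (176,400 divided by 4 channels).

```python
SAMPLE_RATE_HZ = 44_100  # inferred from the text: 176,400 / 4 channels


def samples_per_second(channels: int,
                       sample_rate_hz: int = SAMPLE_RATE_HZ) -> int:
    """Audio samples the system must generate every second."""
    return channels * sample_rate_hz


print(samples_per_second(4))  # quadraphonic: 176400
print(samples_per_second(2))  # stereophonic: 88200
```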

For this reason, in order to manage CPU usage adequately, it was more convenient to load the entire piece in the interaction platform from the start, while providing a manual function that declares an object idle and disables signal generation for that object. In a Max/MSP environment, this can be done easily by placing each SO inside a poly~ object and using the mute message to enable and disable the processing of that object’s signal at will. This method was used in Emovere, with a button for this purpose in the virtual console inside the interaction platform.
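
The idea of loading everything in advance and only switching signal generation on and off can be modelled outside Max/MSP as well. The Python sketch below is a conceptual analogy, not a description of how poly~ works internally; the class names are hypothetical.

```python
from typing import Callable, Dict, List


class Slot:
    """One pre-loaded SO plus a mute flag."""

    def __init__(self, generator: Callable[[int], List[float]]) -> None:
        self.generator = generator
        self.muted = True  # loaded at start-up, idle until it is needed


class Console:
    """Hypothetical virtual console that mixes only the active SOs."""

    def __init__(self) -> None:
        self.slots: Dict[str, Slot] = {}

    def load(self, name: str, generator: Callable[[int], List[float]]) -> None:
        """Do the costly creation work once, before the performance."""
        self.slots[name] = Slot(generator)

    def set_muted(self, name: str, muted: bool) -> None:
        """Cheap toggle that can be used safely during the performance."""
        self.slots[name].muted = muted

    def render_block(self, block_size: int) -> List[float]:
        """Mix one block of samples, skipping muted SOs entirely."""
        mix = [0.0] * block_size
        for slot in self.slots.values():
            if slot.muted:
                continue  # no signal is generated for an idle SO
            for i, sample in enumerate(slot.generator(block_size)):
                mix[i] += sample
        return mix


console = Console()
console.load("drone", lambda n: [1.0] * n)
print(console.render_block(4))  # [0.0, 0.0, 0.0, 0.0] while muted
console.set_muted("drone", False)
print(console.render_block(4))  # [1.0, 1.0, 1.0, 1.0] once active
```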

2. Data Size and Resolution

Another aspect that must be considered in order to get optimal performance from a system like this is the size of the data being sent and its impact on the final sound. In cases where a small difference in the input causes a large difference in the output, it is necessary to use high-resolution data such as floating-point numbers. At other times (probably most of the time) this is not necessary, because a limited resolution is enough to obtain the desired sound result. This aspect is hard to illustrate, as the output of each object may depend on one or several inputs, each with its own range in each SO. However, an analogy can be drawn with the output level of a mixing console: a change of 0.1 dB will probably not be noticeable, whereas a change of 1 dB will. In general, the trade-off between the rate at which the data arrives and the individual weight of each piece of data must be taken into account. For 10 or 100 values, it may not matter much whether they are integers or floating-point numbers, but with thousands of values per second the difference becomes crucial when designing a system like the one used in Emovere.
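
To give a rough sense of this trade-off, the Python sketch below compares the data volume of 4,000 values per second under three encodings and shows what reducing the resolution of a normalized value looks like. The encodings are assumptions chosen for illustration; the text does not specify which data formats were used in Emovere, and the actual size of a message also depends on the protocol that carries it.

```python
import struct


def bytes_per_second(struct_format: str, values_per_second: int) -> int:
    """Raw payload size per second for a given binary encoding."""
    return struct.calcsize(struct_format) * values_per_second


def quantize(value: float, bits: int) -> float:
    """Reduce a value in the range 0.0 to 1.0 to a given bit resolution."""
    levels = 2 ** bits - 1
    clamped = min(1.0, max(0.0, value))
    return round(clamped * levels) / levels


RATE = 4000  # values per second, as in Emovere's sensor input
for label, fmt in [("8-bit integer", "<B"),
                   ("32-bit float", "<f"),
                   ("64-bit float", "<d")]:
    print(label, bytes_per_second(fmt, RATE), "bytes/s")

# With 7 bits there are 128 possible values, so the largest rounding
# error is about 0.4 % of the full range.
print(abs(quantize(0.3, 7) - 0.3))
```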

3. Data Priority

In addition to the previous point, it is also necessary to consider the priority of the data. Max/MSP distinguishes between data that is part of an audio signal and data that is not, and the objects associated with each type of data operate differently inside the program. Objects that generate a signal are handled by the DSP of Max/MSP. Those that do not are handled as high-priority or low-priority events, and both kinds have a lower priority than the signals unless the Max/MSP configuration is intentionally altered. Once again, this is fairly complex, as there is no single rule that always works for the type of interactions we used in Emovere. Therefore, the priority of the data must be analyzed on a case-by-case basis. In general terms, the four elements whose management was key for designing the interaction platform are: Overdrive mode, SIAI (Scheduler in Audio Interrupt) and the defer and deferlow objects.
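
The distinction between high-priority and low-priority events can be pictured with a simplified two-queue model. The Python sketch below is only an analogy: it assumes, following our reading of the Max documentation, that defer places a message at the front of the low-priority queue and deferlow at the back, and it ignores audio processing, Overdrive and SIAI altogether. The class is hypothetical.

```python
from collections import deque
from typing import Deque, List


class TwoLevelScheduler:
    """Simplified model: high-priority events always run first."""

    def __init__(self) -> None:
        self.high: Deque[str] = deque()
        self.low: Deque[str] = deque()

    def schedule_high(self, event: str) -> None:
        """Timing-critical event, such as a level change from a sensor."""
        self.high.append(event)

    def defer(self, event: str) -> None:
        """Move an event to the front of the low-priority queue."""
        self.low.appendleft(event)

    def deferlow(self, event: str) -> None:
        """Move an event to the back of the low-priority queue."""
        self.low.append(event)

    def run(self) -> List[str]:
        """Drain both queues and return the order of execution."""
        order = []
        while self.high or self.low:
            queue = self.high if self.high else self.low
            order.append(queue.popleft())
        return order


scheduler = TwoLevelScheduler()
scheduler.deferlow("redraw interface")
scheduler.schedule_high("set SO level")
scheduler.defer("load configuration")
print(scheduler.run())
# ['set SO level', 'load configuration', 'redraw interface']
```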

4. Data Routing and Opening of Ports

Just as someone chooses the shortest way to get to a destination, it is necessary to provide the shortest possible route for each message, whether MIDI or OSC. For the interaction platform, a section dedicated exclusively to managing messages was programmed. Some messages are addressed to complex processing sections and execute functions remotely, such as creating an SO; these would normally not be used during a performance of the piece. Others must be delivered quickly and with rigorous timing, such as those controlling the output level of an SO that is modulated by a performer’s limb. The message-management section of the interaction platform opens one exclusive port for high-priority messages and another for low-priority messages. High-priority messages are then delivered to the corresponding SO without passing through any script or additional lines of code, which makes delivery as fast as possible.
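
The two-port scheme can be sketched as follows in Python. This is a conceptual model under our own assumptions: the port numbers, addresses and class name are hypothetical, and no real network code is involved. High-priority messages reach their handler through a single dictionary lookup, while low-priority messages are queued for later handling.

```python
from typing import Any, Callable, Dict, List, Tuple

HIGH_PRIORITY_PORT = 9000  # hypothetical port numbers
LOW_PRIORITY_PORT = 9001


class MessageRouter:
    """Hypothetical router with a fast path and a slow path."""

    def __init__(self) -> None:
        self.direct: Dict[str, Callable[[Any], None]] = {}
        self.low_queue: List[Tuple[str, Any]] = []

    def register(self, address: str, handler: Callable[[Any], None]) -> None:
        """Bind an address to the SO parameter it controls."""
        self.direct[address] = handler

    def receive(self, port: int, address: str, value: Any) -> None:
        if port == HIGH_PRIORITY_PORT:
            # Fast path: one lookup, then straight to the SO.
            self.direct[address](value)
        elif port == LOW_PRIORITY_PORT:
            # Slow path: management commands wait to be handled later.
            self.low_queue.append((address, value))
        else:
            raise ValueError(f"unknown port: {port}")


levels: Dict[str, float] = {}
router = MessageRouter()
router.register("/so/1/level", lambda v: levels.update({"so1": v}))
router.receive(HIGH_PRIORITY_PORT, "/so/1/level", 0.8)
router.receive(LOW_PRIORITY_PORT, "/platform/create_so", "grain")
print(levels)            # {'so1': 0.8}
print(router.low_queue)  # [('/platform/create_so', 'grain')]
```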

5. Sampling Frequency and Audio Vector

In addition to all the aspects above, there are vitally important parameters related to how signals are processed inside the interaction platform and, ultimately, within Max/MSP. As noted above, the sampling frequency determines the amount of data that the system must process, and it must also match the sampling frequency of the files stored in the buffer~ object. Otherwise, there may be tuning problems. As for the audio vector, there is an I/O Vector and a Signal Vector. The first parameter sets the amount of data that Max/MSP packs together each time it performs input and output operations, while the second sets the amount of data that Max/MSP packs together for the operations that take place after the input and before the output. There is therefore a trade-off between timing precision and processing load: the larger the vectors, the lower the processing load, but the more noticeable the delay between input and output. Conversely, small vector sizes give a shorter delay at the cost of more intensive processing.
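
Both effects can be put into numbers. The Python sketch below estimates the delay contributed by one vector of a given size, and the pitch error that appears when a file recorded at one sampling frequency is played back sample by sample at another. The function names are ours, and the figures are simple arithmetic rather than measurements of the platform; real latency also depends on the audio interface and driver.

```python
import math


def vector_latency_ms(vector_size: int, sample_rate_hz: int = 44_100) -> float:
    """Time span covered by one vector of samples, in milliseconds."""
    return 1000.0 * vector_size / sample_rate_hz


def pitch_shift_semitones(file_rate_hz: int, system_rate_hz: int) -> float:
    """Pitch error when a file is played at the wrong sampling frequency.

    A negative result means the file sounds lower than intended.
    """
    return 12.0 * math.log2(system_rate_hz / file_rate_hz)


for size in (64, 512, 2048):
    print(size, "samples:", round(vector_latency_ms(size), 2), "ms")

# A 48 kHz file played in a 44.1 kHz system sounds about 1.5 semitones flat.
print(round(pitch_shift_semitones(48_000, 44_100), 2))
```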