Designing Sound Interactions for Biosignals and Dancers

This article summarizes the strategies used to design and compose body-sound interactions for the project Emovere. It is an excerpt of an article presented at the NIME 2016 conference, which covers the subject in more detail.

About Physiological Signals and Their Use as Emotion Indicators

For Emovere, the performers worked with an emotional induction technique called Alba Emoting. This method was developed to help recognize, induce, express and regulate six basic emotions: fear, sadness, eroticism, joy, tenderness and anger. The training consisted of introducing the performers to the postural, respiratory and facial patterns associated with each emotion. The objective of this bottom-up method is for the subject to reproduce the corporal state connected with each basic emotion, which helps them induce and experience that emotion. Alba Emoting works with five consecutive intensity levels for each emotion, where level one is the lowest and level five the highest. For example, a level five corporal pattern for anger requires a higher muscle tone, a more intense facial expression and a more pronounced respiratory cycle than a level one pattern.

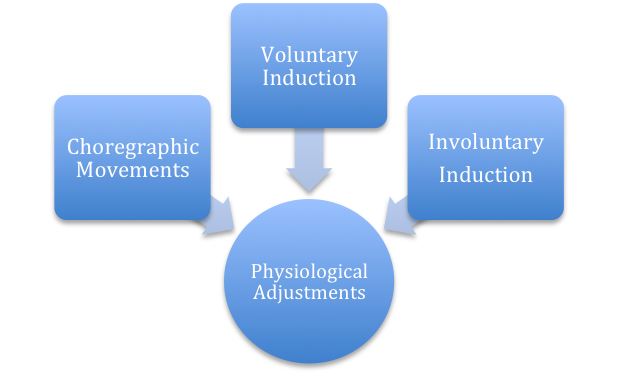

Choreographic Movements: Physical work of the performers that influences physiological adjustments. For example: muscular work when performing a movement that generates EMG activity; increase in the heart rate when engaging in prolonged aerobic exercise.

Voluntary Induction: When adopting one or more corporal patterns of Alba Emoting induction, the performer generates a direct or indirect variation in their physiological configuration. For example: the respiratory pattern influences variations in the heart rate; the muscle tone of anger or fear influences EMG readings.

Involuntary Induction: The performer, when induced or exposed to different emotional states, makes involuntary adjustments in their physiological signals. For example: the stress of a live performance increases the muscle tone, affecting EMG readings; the eroticization of the performer produces an increased heart rate.

Interaction Modes

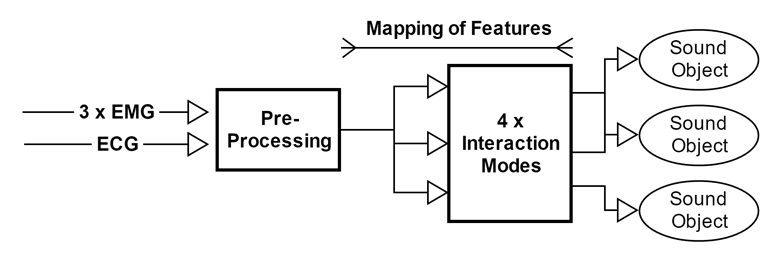

The premise of the project Emovere is that the physiological signals of four performers drive and modulate the piece’s sound environment. To achieve this, muscular activity was measured by electromyogram (EMG) and heart rate by electrocardiogram (ECG). Three EMG sensors were positioned on the dancers’ bodies: two on the biceps and one on the quadriceps femoris. The ECG sensor was placed over the heart of each performer.

First, these signals are pre-processed to eliminate electrical noise and exogenous components, such as the artifacts that may occur when the electrodes move. Perspiration was especially difficult to deal with when working with the dancers: after prolonged physical activity it could short-circuit the sensor electrodes, leaving the signals unusable. This problem was mitigated by modifying the sensors to add absorbent material, which extended their usable time. It nevertheless remained a recurring issue for the choreographic composition, because the order of the sections of the piece had to be adjusted to take this factor into account.
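The article does not specify which filters were used in Emovere. As a minimal sketch of one common pre-processing step, the following subtracts a centered moving average from the signal, which suppresses the slow baseline drift that electrode movement typically produces. The function name `remove_baseline` and the window length are assumptions made for illustration.

```python
def remove_baseline(samples, window):
    """Subtract a centered moving average to suppress slow drift.

    `window` is the averaging length in samples (hypothetical value;
    it would be tuned to the sensor's sampling rate).
    """
    n = len(samples)
    half = window // 2
    out = []
    for i in range(n):
        lo = max(0, i - half)
        hi = min(n, i + half + 1)
        baseline = sum(samples[lo:hi]) / (hi - lo)
        out.append(samples[i] - baseline)
    return out


# A constant offset is treated as baseline and removed entirely.
flat = remove_baseline([2.0] * 10, window=5)
```

A longer window removes only very slow drift, while a shorter one also attenuates more of the signal itself, so the choice depends on the feature to be extracted afterwards.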

Once significant features are extracted from the pre-processed signals, such as the heart rate of the electrocardiogram signal or the muscle tension of the electromyogram signal, the parameter mapping phase begins. It consists of linking the behavior of one or several of these features with one or several sound parameters of a Sound Object. We named the way in which this link is designed an Interaction Mode, and for Emovere, four interaction modes were developed.
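The feature extraction and mapping chain can be sketched as follows. This is an illustration under stated assumptions, not the implementation used in Emovere: muscle tension is approximated by the RMS amplitude of a window of EMG samples, heart rate is derived from beat timestamps, and each feature is mapped linearly onto a sound parameter. The names `rms`, `heart_rate_bpm`, `map_range`, `gain` and `tempo_scale` are hypothetical, as are the sample values; the 55 to 130 bpm input range is the one reported later in this article for one performer.

```python
import math


def rms(window):
    """Root-mean-square amplitude of one window of EMG samples."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def heart_rate_bpm(beat_times):
    """Mean heart rate from a list of beat timestamps in seconds."""
    intervals = [b - a for a, b in zip(beat_times, beat_times[1:])]
    return 60.0 / (sum(intervals) / len(intervals))


def map_range(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly map value from [in_lo, in_hi] to [out_lo, out_hi], clamped."""
    t = (value - in_lo) / (in_hi - in_lo)
    t = min(1.0, max(0.0, t))
    return out_lo + t * (out_hi - out_lo)


# Hypothetical, already pre-processed input data.
emg_window = [0.3, -0.4, 0.5, -0.3, 0.4, -0.5]
beat_times = [0.0, 0.5, 1.0, 1.5]

tension = rms(emg_window)                            # feature 1
bpm = heart_rate_bpm(beat_times)                     # feature 2
gain = map_range(tension, 0.0, 1.0, 0.0, 1.0)        # sound parameter 1
tempo_scale = map_range(bpm, 55.0, 130.0, 0.5, 2.0)  # sound parameter 2
```

Clamping in `map_range` keeps the sound parameter inside its valid range when a feature briefly leaves the expected interval, which is likely with noisy biosignals.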

The interaction modes were designed by observing the movement qualities that arose from Alba Emoting and through a heuristic trial-and-error methodology. The laboratory sessions, in which the strategies that emerged from dance or sound proposals were tested and evaluated, had a significant influence on this design.

The four interaction modes used in Emovere are presented and described below:

Layers

Inspired by the five levels of intensity that the performers explored and assimilated with the Alba Emoting technique, this interaction mode uses the muscle tension of the dancers’ bodies to drive different layers of sound objects. These sound objects were designed to increase in intensity and complexity as the number of layers involved increases.
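One way to picture this mode is a function that turns a normalized muscle tension value into a gain for each layer, so that layers stack up as tension rises. The sketch below assumes five layers (mirroring the five Alba Emoting levels) and linear fades; both the function name `layer_gains` and the fade shape are assumptions, not details taken from the piece.

```python
def layer_gains(tension, num_layers=5):
    """Per-layer gains in [0, 1] for a normalized tension in [0, 1].

    Layer k fades in linearly while tension moves through its own
    slice [k / num_layers, (k + 1) / num_layers].
    """
    tension = min(1.0, max(0.0, tension))
    gains = []
    for k in range(num_layers):
        g = tension * num_layers - k
        gains.append(min(1.0, max(0.0, g)))
    return gains


# At half tension the first two layers are fully on, the third is fading in.
half = layer_gains(0.5)
```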

An extension of this mode was designed to integrate the four dancers in the scene, allowing the four bodies to be connected to a single sound object. To achieve this, the sound object was divided into frequency bands that were assigned to the different performers.
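The band assignment could look like the sketch below, which splits a frequency range into one logarithmically spaced band per performer. The article does not say how the bands were chosen, so the log spacing, the function name `split_bands`, the performer labels and the 100 to 1600 Hz range are all assumptions for illustration.

```python
def split_bands(f_lo, f_hi, performers):
    """Assign each performer one of len(performers) log-spaced bands.

    Returns a dict mapping performer -> (low_hz, high_hz).
    """
    n = len(performers)
    ratio = (f_hi / f_lo) ** (1.0 / n)
    edges = [f_lo * ratio ** i for i in range(n + 1)]
    return {p: (edges[i], edges[i + 1]) for i, p in enumerate(performers)}


# Hypothetical range and labels: four performers, one octave each.
bands = split_bands(100.0, 1600.0, ["A", "B", "C", "D"])
```

Each performer's muscle tension would then control only the gain of their own band, so the full spectrum of the sound object is heard only when all four bodies are active.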

Events

A similar characteristic emerged from the movement qualities produced by two basic emotions: anger and fear. When practicing the inductions of these two emotions, the performers tended to move quickly and abruptly, showing a general increase in muscle tone. In turn, the electromyogram signal for these behaviors was erratic, with energy bursts that were not always correlated with the movement of an arm or a leg. Based on these readings, and partly inspired by the fight-or-flight reaction associated with these emotions, an interaction mode was designed to capture the unpredictability and the energy increase of these emotions.
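A simple way to turn such energy bursts into discrete sound events is to trigger whenever the EMG envelope rises above a threshold, ignoring retriggers for a short time afterwards. This is a sketch of that general technique, not the detector used in the piece; `detect_bursts`, the threshold and the refractory length are hypothetical.

```python
def detect_bursts(envelope, threshold, refractory):
    """Return sample indices where the envelope rises above threshold.

    After each trigger, further triggers are ignored for `refractory`
    samples so that one burst does not fire several events.
    """
    events = []
    last = -refractory
    prev_above = False
    for i, v in enumerate(envelope):
        above = v > threshold
        if above and not prev_above and i - last >= refractory:
            events.append(i)
            last = i
        prev_above = above
    return events


# Hypothetical envelope with three upward crossings; the second falls
# inside the refractory period of the first and is skipped.
envelope = [0.1, 0.2, 0.9, 0.8, 0.1, 0.7, 0.1, 0.1, 0.95, 0.2]
triggers = detect_bursts(envelope, threshold=0.5, refractory=4)
```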

Voice and Control

One of the elements that appeared during the project’s Alba Emoting and laboratory sessions was the use of the performers’ voices as sound material. The emotional induction technique used by the performers involved managing distinctive respiratory patterns for each emotion. In many instances, the induction exercises included vocal and respiratory sounds that helped express and deepen the emotion being practiced. This motivated the idea of experimenting with vocal improvisations and reading texts under different emotions, which resulted in a spectrum of sound materials that was rich in timbre and expressiveness. This work fed into two fundamental components of the piece’s sound design: on one hand, the performers’ studio-recorded vocal material was used to build multiple sound objects for the piece; on the other, an interaction mode was designed specifically for working with the performers’ live voices.

The voice and control interaction mode was designed to capture the performers’ voices on stage and immediately place this sound under the control of their bodies. This allowed the performers to modulate their own voices using granular synthesis techniques that disarrange the recorded sound and mix it with their vocal register.
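To illustrate the granular idea, the sketch below cuts short Hann-windowed grains out of a recorded buffer and overlap-adds them into an output, with a control value scattering the positions the grains are read from. Using muscle tension as that control value is an assumption, as are all names and parameters (`grain`, `granulate`, `hop`, the 8000 Hz test tone); the article does not describe the actual synthesis engine.

```python
import math
import random


def grain(buffer, start, length):
    """Copy `length` samples from `start` and apply a Hann window."""
    out = []
    for i in range(length):
        w = 0.5 - 0.5 * math.cos(2.0 * math.pi * i / (length - 1))
        out.append(buffer[start + i] * w)
    return out


def granulate(buffer, tension, num_grains, grain_len, hop, rng):
    """Overlap-add grains; higher tension scatters the read positions.

    tension = 0 reads the buffer in order, tension = 1 lets each grain
    come from anywhere in the buffer.
    """
    out = [0.0] * (hop * (num_grains - 1) + grain_len)
    max_start = len(buffer) - grain_len
    for g in range(num_grains):
        center = min(g * hop, max_start)
        jitter = int(tension * max_start * (rng.random() * 2.0 - 1.0))
        start = min(max_start, max(0, center + jitter))
        for i, s in enumerate(grain(buffer, start, grain_len)):
            out[g * hop + i] += s
    return out


# Hypothetical "voice": one second of a 220 Hz tone at 8000 Hz.
voice = [math.sin(2.0 * math.pi * 220.0 * n / 8000.0) for n in range(8000)]
ordered = granulate(voice, 0.0, 10, 400, 200, random.Random(0))
scattered = granulate(voice, 1.0, 10, 400, 200, random.Random(0))
```

Passing a seeded `random.Random` instance keeps the scatter reproducible, which is convenient when comparing settings during rehearsal.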

Heartbeat and Biofeedback

The heart rate of the performers measured during the laboratory sessions covered a wide range; for one of our performers it oscillated between 55 and 130 beats per minute. As noted above, this could not be attributed solely to variations in emotional state, but also to physical activity and respiratory patterns, which directly influence the heart rate. However, the simple exercise of listening to the heartbeat of one performer while they self-induced emotional states turned out to be an attractive experience with great expressive content. The amplification of this intimate internal process, which is usually hidden from others, motivated the design of a dedicated interaction mode for working with heartbeats and basic emotions. The objective of this mode was to let the performers experience different levels of intensity of basic emotions while hearing their amplified heartbeats, creating a biofeedback loop. The heartbeats of the four performers were treated as a single instrument, triggering a series of percussive and sustained sounds. The sound composition here was more reactive than interactive, as the performers were not voluntarily controlling the sound result.
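Treating four heartbeats as a single instrument amounts to merging the per-performer beat times into one time-ordered stream of trigger events. The sketch below does this with `heapq.merge` from the Python standard library and attaches an instantaneous heart rate to each beat. The function name `beat_events`, the performer labels and the timestamps are hypothetical; how each event was turned into a percussive or sustained sound is not specified in the article and is left out.

```python
import heapq


def beat_events(beats_by_performer):
    """Return (time, performer, bpm) for every beat, ordered by time.

    Each performer's beat times must already be sorted. bpm is the
    instantaneous rate since that performer's previous beat, or None
    for a performer's first beat.
    """
    streams = [[(t, name) for t in times]
               for name, times in beats_by_performer.items()]
    last_beat = {}
    events = []
    for t, name in heapq.merge(*streams):
        prev = last_beat.get(name)
        bpm = 60.0 / (t - prev) if prev is not None else None
        events.append((t, name, bpm))
        last_beat[name] = t
    return events


# Hypothetical beat timestamps in seconds for two of the performers.
events = beat_events({"A": [0.0, 1.0], "B": [0.5, 1.0]})
```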